
Inside the AI Economy: How Circular Investment and Energy Demand Are Reshaping Business


- Authored & Reviewed by: CLFI Faculty

AI Unicorns: Valuation Leaderboard. Leading AI companies by private valuation (rank, company, valuation, country, specialisation).

Artificial Intelligence has become the new frontier of investment. From hyperscale data centres to AI chipmakers, valuations are surging to levels unseen since the dot-com era. Yet, beneath the optimism lies an essential question: how sustainable is this growth when the true demand for compute remains largely untested?

A major feature of the current AI landscape is how long the leading companies are choosing to remain private. Firms like OpenAI ($500B), Anthropic ($183B), and Databricks ($100B) continue to raise capital at extraordinary valuations without entering public markets. This reflects a broader shift of capital from public to private markets, where deep-pocketed investors (sovereign funds, private equity, and corporate venture arms) are willing to fund growth without the scrutiny of quarterly reporting. It is a model that blurs the line between venture financing and industrial strategy, but it also concentrates power among a small circle of investors and insiders, shaping the AI economy before it ever reaches public accountability.

The Demand Side: Tokens, Energy, and Applications

The valuation of today’s AI economy is not simply about how many users interact with chatbots; it depends on how intensive those interactions are. Generating a text prompt to find the best restaurant in a city is trivial compared with prompting a model such as Sora to generate an entire video sequence. According to MIT Technology Review, the latter can draw hundreds or even thousands of times more energy (about as much as running a microwave for an hour) just to make a few seconds of AI-generated video. This “token intensity” defines the demand side of AI infrastructure. As new multimodal agents and video-generation models emerge, token usage per user could multiply tenfold or a hundredfold. That expectation is now underwriting hundreds of billions of dollars of capital deployment into chips, data-centre land, and power supply chains, on the assumption that compute demand will soon catch up with the investment curve. This is the core of the current investment thesis, and it is also the greatest unknown.

Definition:

Token

In artificial intelligence, a token represents a fragment of data — usually a word, syllable, or character — processed by a language model. The more complex a prompt or task, the more tokens it consumes, which directly affects the compute cost and energy demand of the AI system.
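To make “token intensity” concrete, the sketch below multiplies a user base by tokens per user per day and an energy cost per token, then applies tenfold and hundredfold multipliers like those discussed above. Every input (100 million users, 2,000 tokens per user per day, 0.5 joules per token) is a hypothetical placeholder chosen for round numbers, not a measured figure; only the joule-to-kWh conversion is exact.

```python
# Hypothetical sketch: how per-user token intensity scales aggregate energy demand.
# All inputs below are illustrative placeholders, not measured figures.

JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6 million joules (exact unit conversion)


def daily_energy_kwh(users: int, tokens_per_user: float, joules_per_token: float) -> float:
    """Aggregate daily inference energy, in kWh, for a given user base."""
    total_tokens = users * tokens_per_user
    return total_tokens * joules_per_token / JOULES_PER_KWH


if __name__ == "__main__":
    USERS = 100_000_000       # hypothetical user base
    BASE_TOKENS = 2_000       # hypothetical tokens per user per day (text chat)
    JOULES_PER_TOKEN = 0.5    # hypothetical energy cost per generated token

    # Today's intensity, then the tenfold and hundredfold scenarios.
    for multiplier in (1, 10, 100):
        kwh = daily_energy_kwh(USERS, BASE_TOKENS * multiplier, JOULES_PER_TOKEN)
        print(f"{multiplier:>3}x tokens per user -> {kwh:,.0f} kWh per day")
```

With these placeholder inputs the baseline comes to roughly 27,800 kWh per day and the hundredfold case to roughly 2.8 million kWh per day. The absolute numbers mean little; the point is that energy demand scales linearly with tokens per user, which is why expectations about per-user intensity carry so much weight in the investment case.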

The Compute-Energy Nexus Driving AI Capital Expenditure

Let’s first define the two layers of the AI economy.

The primary market builds the models — companies like OpenAI, Anthropic, xAI, and Google DeepMind. This layer is capital-intensive, loss-making, and increasingly dominated by a few firms that can afford the vast compute, talent, and data costs required at the frontier.

The secondary market applies those models. Here, AI begins to touch the real economy — in search, productivity, research, customer service, and coding tools. Revenue is generated downstream through subscriptions, APIs, and enterprise integrations. In simple terms, the primary market burns capital, while the secondary market converts it into cash flow.

These dynamics around capital deployment, long-dated investment cycles, and growth are examined within the Corporate Finance Executive Course.

What makes this cycle particularly distinctive is its deep connection to energy — a connection that goes far beyond metaphor. Every interaction with an artificial intelligence system, from a simple text prompt to a complex video-generation request, draws upon vast amounts of computational power. That power, in turn, is inseparable from the physical world of energy supply — from the grid, to the data centre, to the silicon that translates voltage into thought. The token economy, often described in abstract digital terms, is in reality a vast ecosystem running on electricity. Each model response, each inference, each generated token is ultimately a small act of energy conversion — the modern equivalent of steam in the industrial age.

Training and inference at scale demand enormous energy inputs, leading to an emerging narrative where AI becomes a macroeconomic driver of national energy strategies.

Definition:

Inference

In artificial intelligence, inference refers to the process by which a trained model generates an output — such as a response, prediction, or image — from new input data. Unlike training, which teaches the model, inference is the execution phase that applies learned patterns in real time, often determining speed, cost, and energy efficiency.
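The split between training and inference can be illustrated with the smallest possible model. The sketch below is a hypothetical toy, not how large language models work internally: it “trains” a one-variable linear model by ordinary least squares on a handful of made-up points, then runs inference by applying the learned parameters to new inputs. The function names `train` and `infer` are illustrative only.

```python
# Hypothetical toy: separating training (learning parameters) from inference (applying them).
# Real AI models have billions of parameters; this one has two.

def train(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares (the training phase)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(params: tuple[float, float], x_new: float) -> float:
    """Apply already-learned parameters to a new input (the inference phase)."""
    slope, intercept = params
    return slope * x_new + intercept


if __name__ == "__main__":
    # Made-up training data that follows y = 2x + 1 exactly.
    params = train([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
    # Training happens once; inference is repeated for every new request.
    for x in (4.0, 10.0):
        print(x, "->", infer(params, x))
```

Here the learned parameters are a slope of 2 and an intercept of 1, so inference on 4 and 10 returns 9.0 and 21.0. A real model’s parameters are learned once at great expense, but every user request triggers a fresh round of inference, which is why inference efficiency shapes ongoing cost and energy use.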

OpenAI’s president recently remarked, “We are not ready for the demand in an AI-powered economy.” Behind that statement is a new policy mindset: nations are being pushed to digitise every remaining analogue system — from public administration to healthcare and education — in order to stay competitive.

In this shift, governments become the biggest customers of AI, measured not by software licences but by population reach. Each national rollout can mean tens or even hundreds of millions of users, making large-scale public-sector adoption one of the most powerful narratives now driving investor confidence and the ambitions of AI’s leading companies.

At its annual symposium, Blackstone described artificial intelligence as the defining growth engine of this decade. The firm pointed to a sixfold surge in capital expenditure by hyperscalers, now reaching about 364 billion dollars or roughly one percent of U.S. GDP, as the financial backbone of this new industrial wave.
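The Blackstone figures can be sanity-checked with two lines of arithmetic. The sketch assumes U.S. nominal GDP of about $30 trillion, a round number chosen for illustration rather than a figure from Blackstone. On that basis, $364 billion is roughly 1.2% of GDP, consistent with “roughly one percent”, and a sixfold surge, read literally, implies a starting level of about $61 billion.

```python
# Sanity check of the hyperscaler capex figures quoted above (US$ billions).
# US_GDP_USD_BN is an assumed round number for illustration, not a sourced figure.

CAPEX_USD_BN = 364        # hyperscaler capital expenditure cited by Blackstone
SURGE_MULTIPLE = 6        # "sixfold surge"
US_GDP_USD_BN = 30_000    # assumption: U.S. nominal GDP of roughly $30 trillion

share_of_gdp = CAPEX_USD_BN / US_GDP_USD_BN
implied_starting_capex = CAPEX_USD_BN / SURGE_MULTIPLE  # level before the sixfold rise

print(f"Capex as share of GDP: {share_of_gdp:.1%}")
print(f"Implied pre-surge capex: ${implied_starting_capex:.0f}bn")
```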

Definition:

Hyperscaler

A hyperscaler is a company that operates large-scale cloud infrastructure capable of supporting massive data storage and computing workloads. These firms — such as Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle — build and manage the global networks of data centers that power artificial intelligence, digital services, and enterprise cloud computing at scale.

That investment is moving directly into the infrastructure behind AI, including advanced chips, large-scale data centers, and the expansion of the power grid needed to sustain them.

Blackstone’s strategy follows a familiar pattern from earlier industrial revolutions, often called the “picks and shovels” approach. Rather than chasing the volatile valuations of model developers and software firms, the company is investing in the physical foundations of AI growth, from energy systems and cooling networks to the data-center capacity on which the entire ecosystem relies. After two decades of flat electricity demand, U.S. consumption is now expected to increase by nearly forty percent, turning energy from a background input into the key constraint on global AI expansion.

Capital Is a Resource. Allocation Is a Strategy.

Learn more through the Executive Certificate in Corporate Finance, Valuation & Governance – a structured programme integrating governance, finance, valuation, and strategy.

The Scale of the Build-out

OpenAI, Nvidia, Oracle, and AMD have dominated recent headlines — not only for their breakthroughs but for the intricate web of partnerships now forming between them. These cross-agreements have sparked what many analysts call the “Circular AI Economy” debate: are these companies genuinely expanding the frontier of innovation, or are they amplifying one another’s valuations by trading capacity, compute, and capital within the same ecosystem?

During a series of high-profile interviews and what looked like a well-coordinated roadshow, financiers such as JPMorgan’s Jamie Dimon and the CEOs of leading tech and AI companies converged on a single point of consensus. Energy, they warned, is the defining constraint of the AI era. We are still in the very first chapter of the infrastructure build-out, what AMD’s Lisa Su recently referred to as “the first gigawatt” of true AI-scale data centres now being constructed.

Executive Perspectives on AI and Energy

“We’re seeing massive demand for more compute, and compute is the foundation of that. These are huge gigawatt-scale deployments — and power is the resource everyone is chasing.”

“We need as much computing power as we can possibly get. The whole industry is trying to adapt to the level of demand for AI services we see coming.”

“This is the first ten gigawatts — an engineering project of unprecedented scale and complexity. Every interaction, every image, every word will soon be touched by AI.”

“For $2 billion of expense, we’ve seen roughly $2 billion of benefit. It affects risk, fraud, marketing, and service — and it’s only the tip of the iceberg.”

“AI is already straining the global power supply. Within a few years, access to clean, reliable energy will determine where the next generation of models are trained.”

For context, these companies are still scaling their first one- or two-gigawatt (GW) AI data centres.

OpenAI and its partners are expected to move toward two or three gigawatts next year, but this remains the early stage of an unprecedented global rollout.

Experts estimate that each gigawatt of data-centre capacity represents roughly $50 billion in combined investment, covering chips, land, power infrastructure, and cooling. In this narrative, power has become the new constrained resource, turning energy strategy into a macroeconomic variable. That is the reasoning behind OpenAI and the hyperscalers reportedly targeting ten gigawatts over the next five years.
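Taking the experts’ rule of thumb at face value, the arithmetic behind these targets is straightforward. The sketch below assumes a flat $50 billion per gigawatt and an even spread over five years; both are simplifications, since real costs vary by site, chip generation, and power source. On those assumptions, ten gigawatts implies roughly $500 billion, or about $100 billion a year, and the two-to-three-gigawatt step expected next year implies roughly $100 to $150 billion.

```python
# Rough build-out cost using the "about $50 billion per gigawatt" rule of thumb quoted above.
# The per-GW figure comes from the text; the even five-year split is a simplifying assumption.

COST_PER_GW_USD_BN = 50


def buildout_cost_bn(gigawatts: float, cost_per_gw_bn: float = COST_PER_GW_USD_BN) -> float:
    """Total combined investment (chips, land, power, cooling) in billions of dollars."""
    return gigawatts * cost_per_gw_bn


if __name__ == "__main__":
    target_gw, years = 10, 5
    total = buildout_cost_bn(target_gw)
    print(f"{target_gw} GW target: ~${total:,.0f}bn total, ~${total / years:,.0f}bn per year")
    for gw in (2, 3):  # the "two or three gigawatts next year" range
        print(f"{gw} GW: ~${buildout_cost_bn(gw):,.0f}bn")
```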

As we enter the final quarter of 2025, AI leaders now account for an astonishing share of U.S. growth: the handful of trillion-dollar firms driving this wave are responsible for roughly 40 percent of total U.S. GDP growth this year, and for nearly 80 percent of the gains in the U.S. stock market to date. The economic footprint of the sector has become systemic.

JPMorgan chief executive Jamie Dimon’s comments underscored how deeply AI is already embedded in mainstream corporate infrastructure. The bank employs more than 2,000 specialists and invests about $2 billion a year in AI, yielding a comparable $2 billion in measurable annual savings through automation in risk, fraud detection, marketing, and client service. “It’s the tip of the iceberg,” Dimon said. “We’re getting better and better at it … our managers and leaders have to learn how to use this thing.”

The point of his remarks is that AI is no longer an R&D experiment but a productivity driver at scale, and that adoption is now spreading across every major financial and industrial enterprise.

Still, this optimism mirrors previous bubbles. In the late 1990s, investors built fibre networks far faster than end-user demand justified. Eventually the capacity was absorbed, but only after a painful correction. The same dynamic may now apply to GPUs and data centres. Capital is being deployed in anticipation of use-cases that have yet to prove durable economics.

The Market Psychology Behind AI Valuations

Market enthusiasm tends to overcorrect on both sides. As with the fibre networks of the late 1990s, billions are now being deployed into data centres, GPU clusters, and AI startups on the assumption that compute demand will soon match supply. Investors understand the risk, but the logic of momentum and competition drives a form of collective overconfidence. No fund wants to be the one that missed “the obvious trade.” In this environment, capital deployment becomes a signalling exercise, a race to prove conviction. As in previous cycles, exuberance and peer pressure can override rational capital allocation, at least temporarily.

Historical Echoes: The Dot-Com and Post-COVID Parallels

We’ve seen similar cycles before. The dot-com boom overbuilt infrastructure. The 2008 financial crisis was fuelled by over-leverage in real estate and the related mortgage market. And during the COVID lockdowns, record liquidity drove the SPAC and meme-stock mania. Each cycle was followed by a sharp correction. Looking at charts of how severe these corrections were and how long they lasted, each correction period can appear shorter than the last, as if the market washes out the excess quickly and returns to its long-term trend of higher highs. But the real economy, beyond the trading floor, can take longer to recover. After 2008, property bubbles and fragile banks in Spain, Greece, and Italy took years to unwind. Balance sheets were rebuilt through bailouts, recapitalisations, and painful restructuring before confidence returned. Today’s AI market could be following a similar script, with compute playing the role of property or fibre: the critical infrastructure of a digital future still taking shape.

Are We Witnessing Another Historic Bubble?

Since the release of ChatGPT in November 2022, Nvidia’s market capitalisation has multiplied several-fold, transforming GPUs into the new oil of the digital economy.

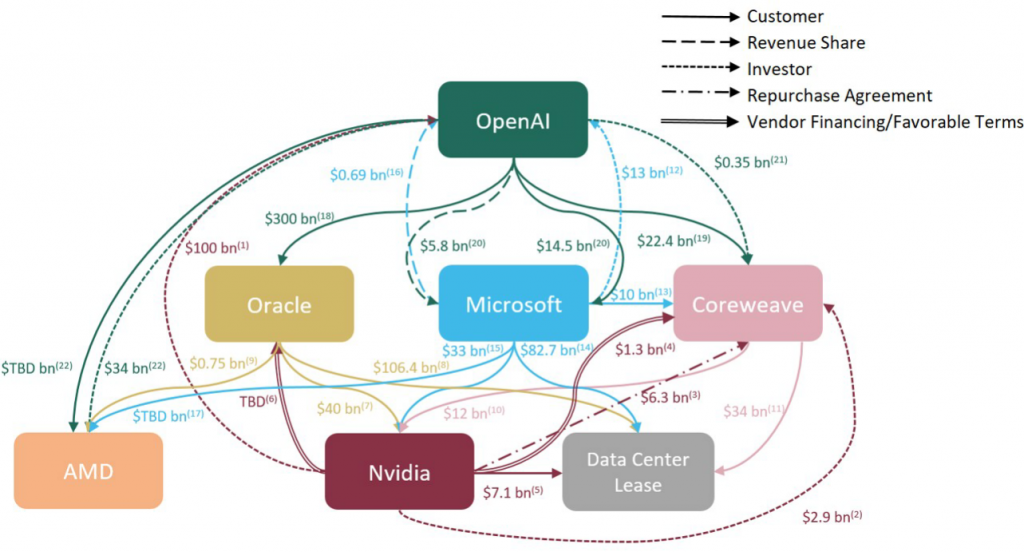

Recent partnerships — between OpenAI, Nvidia, AMD, Oracle, and even secondary players like CoreWeave — have created what analysts are now calling the “Circular AI Economy.” In just a few months, the industry’s largest companies have entered a dense web of reciprocal deals: OpenAI agreeing to purchase tens of billions of dollars’ worth of AMD chips while taking a stake in AMD itself; Nvidia investing up to $100 billion in OpenAI to co-develop new data-centre infrastructure; and OpenAI committing nearly $300 billion to run its workloads on Oracle’s cloud. Each agreement feeds the next — capital, chips, and compute flowing in a closed circuit that sustains valuation momentum across the same handful of firms.

Morgan Stanley recently published a report titled “AI: Mapping Circularity,” which examines how companies at the center of the AI boom — including OpenAI — are increasingly tied together through mutual funding, shared revenue, and cross-ownership. The report highlights that suppliers are now financing their own customers, blurring traditional market boundaries and creating a highly interconnected ecosystem. The following chart from Morgan Stanley illustrates this circular flow of capital and dependency surrounding OpenAI and its major partners.

Source: Company Data, Morgan Stanley Research.
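The “closed circuit” described earlier can be made concrete by treating each deal as an edge in a directed graph and searching for loops. The sketch below is a simplified illustration, not a reproduction of the Morgan Stanley analysis: the edges paraphrase the OpenAI, Nvidia, AMD, and Oracle deals named in the text, while the Oracle-to-Nvidia edge (a cloud provider buying GPUs) is an assumption added for illustration and is marked as such in the code. Cash flows and equity flows are treated alike, which a real analysis would not do.

```python
# Hypothetical sketch: model deals as a directed graph of money/asset flows and find closed loops.
# The Oracle -> Nvidia edge is an illustrative assumption; the others paraphrase deals in the text.

from collections import defaultdict

DEALS = [
    ("Nvidia", "OpenAI", "investment of up to $100bn"),
    ("OpenAI", "Oracle", "cloud commitment of nearly $300bn"),
    ("OpenAI", "AMD", "chip purchases"),
    ("AMD", "OpenAI", "equity stake"),
    ("Oracle", "Nvidia", "GPU purchases (assumed)"),
]


def find_cycles(edges):
    """Return every simple cycle once, each starting from its alphabetically first node."""
    graph = defaultdict(list)
    for src, dst, _label in edges:  # labels are kept only for readability
        graph[src].append(dst)

    cycles = []

    def dfs(start, node, path):
        for nxt in graph[node]:
            if nxt == start:
                cycles.append(path + [start])      # the loop closes back at the start
            elif nxt not in path and nxt > start:  # only visit nodes "after" start to avoid duplicates
                dfs(start, nxt, path + [nxt])

    for start in sorted(graph):
        dfs(start, start, [start])
    return cycles


if __name__ == "__main__":
    for cycle in find_cycles(DEALS):
        print(" -> ".join(cycle))
```

With these edges the search reports two loops: AMD -> OpenAI -> AMD (chips sold, equity granted back) and Nvidia -> OpenAI -> Oracle -> Nvidia. Starting each search at a loop’s alphabetically first company is a standard way to report every simple cycle exactly once.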

Larry Ellison, who owns about 41% of Oracle, saw his fortune climb by approximately US $100 billion to US $392.6 billion (Forbes, Sept 2025). Elon Musk remains ahead at US $439.9 billion, but the gap has narrowed considerably.

But that’s on the promise side. On the spending side, the scale of investment required to stay in the AI race has become staggering. The world’s largest technology firms are pouring unprecedented sums into compute, data centers, and model integration simply to remain competitive.

In 2025 alone, Amazon is projected to spend around $100 billion on AI infrastructure —more than the GDP of some countries— followed by Microsoft at $80 billion, Alphabet at $75 billion, and Meta at $60 billion. Even Apple, typically cautious about public AI bets, is investing over $10 billion, while Tesla channels roughly $5 billion into autonomous and “world model” systems.
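Summing the projections gives a sense of scale. The sketch simply totals the figures quoted in the paragraph above; because these are projections and Apple’s figure is stated as a floor (“over $10 billion”), the result is an approximate lower bound rather than an official industry total.

```python
# Tally of the 2025 AI infrastructure projections quoted above (US$ billions).
# Apple is listed as "over $10 billion", so the totals are lower bounds.

PROJECTED_AI_CAPEX_BN = {
    "Amazon": 100,
    "Microsoft": 80,
    "Alphabet": 75,
    "Meta": 60,
    "Apple": 10,   # "over $10 billion": treated as a floor
    "Tesla": 5,    # "roughly $5 billion"
}

big_four = sum(PROJECTED_AI_CAPEX_BN[c] for c in ("Amazon", "Microsoft", "Alphabet", "Meta"))
total = sum(PROJECTED_AI_CAPEX_BN.values())
amazon_share = PROJECTED_AI_CAPEX_BN["Amazon"] / total

print(f"Amazon, Microsoft, Alphabet, Meta: ~${big_four}bn")
print(f"All six companies: at least ~${total}bn; Amazon alone ~{amazon_share:.0%}")
```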

These numbers illustrate how quickly AI has become a capital-intensive arms race, where access to compute and energy defines strategic survival. A striking example is the recent report that OpenAI is preparing to launch an AI-powered web browser, a direct move into Alphabet’s core territory and a potential threat to Google Chrome’s dominance. The message is clear: in the current phase of the AI race, even trillion-dollar incumbents can be disrupted almost overnight.

From “Software Eats the World” to “Software Eats Labour”

Venture capital perspectives are also shaping this narrative. Andreessen Horowitz (a16z), whose co-founder Marc Andreessen famously declared that “software is eating the world,” now argues that software will eat labour.

In their view, the numbers speak for themselves: U.S. labour is worth about $13 trillion a year, compared to roughly $300 billion for the global software market. If AI can turn capital into computing power that “does the job of labour,” the potential impact is enormous — allowing companies to sell results rather than just tools.
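The scale of the claim follows from a simple ratio. Using only the two figures in the paragraph above, the sketch shows that U.S. labour spend is roughly 43 times the global software market, so capturing little more than 2% of it would match the size of today’s entire software industry. The “capture share” framing is an illustrative assumption; it says nothing about how much of that value AI can actually win.

```python
# Back-of-the-envelope version of the a16z comparison quoted above (US$ trillions per year).
# Both inputs come from the text; the capture-share framing is an illustrative assumption.

US_LABOUR_MARKET_TN = 13.0
GLOBAL_SOFTWARE_MARKET_TN = 0.3

ratio = US_LABOUR_MARKET_TN / GLOBAL_SOFTWARE_MARKET_TN
# Share of U.S. labour spend that AI would need to capture to match today's software market.
breakeven_share = GLOBAL_SOFTWARE_MARKET_TN / US_LABOUR_MARKET_TN

print(f"U.S. labour is ~{ratio:.0f}x the global software market")
print(f"Capturing {breakeven_share:.1%} of U.S. labour spend would equal the software market")
```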

The implication is clear. The market for AI is no longer limited to office tasks or digital workflows; it now reaches across the entire global labour economy. It also raises the question of how much this vision is shaped by venture capitalists and financiers who operate from positions of privilege and inherited security. The world of private markets is still far from a true meritocracy. It remains closed, self-referential, and largely uniform. Access often matters more than ability, and those directing the flow of capital are often the furthest removed from the realities of the work they aim to automate. Perhaps that is a discussion for another time.

Sequoia Capital describes the current moment as a “cognitive revolution” on par with the industrial revolution. Their analysis projects a $10 trillion opportunity as AI expands from software into services—automating tasks once performed by lawyers, nurses, engineers, and analysts. Sequoia notes that each major industrial epoch required time for specialization: from the first steam engine to the first factory took 67 years; from the first factory to the assembly line, 144. AI may compress that timeline to a decade.

Sequoia describes the new economy in simple terms: “flops per knowledge worker.” In other words, how much computing power each employee uses as AI tools become part of daily work. They estimate that this figure could grow tenfold or even a thousandfold per person as AI agents take on more tasks. This expectation reinforces the investment frenzy around chips, data centres, and energy infrastructure.

On the CEO’s Role in the Age of AI

On 1st October 2025, in an interview on Bloomberg TV, Bob Sternfels, McKinsey Global Managing Partner, said “The role of a CEO is never to be comfortable. You have to play offense and defense at the same time — adopting new technologies while building resilience for the shocks that will inevitably come. There’s excitement about what AI can do — from drug discovery to customer experience — but also a recognition that volatility is the new normal. This won’t ‘settle down’; this is the new operating environment.”

This statement sends a clear message: the conversation around AI has shifted from possibility to urgency. AI is now a CEO-level agenda item, requiring leaders to take a proactive approach, either to innovate their products and services or, at a minimum, to improve efficiency across roles and departments.

The survey below shows how generative AI is being adopted across different business functions.

Gen AI adoption by function, overall (% of respondents)

| Business function | Overall (%) |
|---|---|
| Marketing and sales | 42 |
| Product and/or service development | 28 |
| IT | 23 |
| Service operations | 22 |
| Knowledge management | 21 |
| Software engineering | 18 |
| Human resources | 11 |
| Risk, legal, and compliance | 11 |
| Strategy and corporate finance | 11 |
| Supply chain / inventory management | 8 |
| Manufacturing | 2 |
| Using Gen AI in at least 1 function | 71 |

Note: “Overall” represents the cross-industry share of respondents regularly using Gen AI in each function.

Data recreated from McKinsey, “The State of AI: How organizations are rewiring to capture value.”

What Should Business Leaders Look For in AI?

For CEOs and business leaders, the question is no longer whether AI matters but how to use it in ways that deliver measurable results. The gap between excitement and outcomes is growing, yet the innovation remains real. The companies that are succeeding are not only those creating the models but also those integrating AI into their operations, from automating customer service and improving forecasting to enhancing logistics and creating more personalised digital experiences.

To use AI competitively, leaders should focus on economic performance rather than technical capability. The key test is how efficiently AI turns computing power into cash flow. Whether a business licenses existing models or builds applications on top of them, the fundamentals remain the same: margins, customer retention, and return on compute. At the model level, for instance, figures such as revenue per thousand tokens or gross margin per API call show whether usage is profitable or merely scaling at a loss. At the application level, measures like net dollar retention (how much an existing customer cohort spends after a year relative to what it spent at the start) and workflow penetration (how many tasks the AI tool actually improves) are the best signs of lasting business value.
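These metrics are simple ratios, and writing them out shows what data a business needs in order to track them. The sketch below uses common industry definitions, which may differ from how any particular company reports them; the function names and every input in the example are hypothetical.

```python
# Hypothetical sketch of the unit-economics metrics named above.
# Formulas follow common industry usage; all example inputs are made up.

def revenue_per_thousand_tokens(revenue: float, tokens: int) -> float:
    """Revenue earned per 1,000 tokens processed."""
    return revenue / tokens * 1_000


def gross_margin_per_call(revenue: float, serving_cost: float, calls: int) -> float:
    """Average gross profit (revenue minus compute/serving cost) per API call."""
    return (revenue - serving_cost) / calls


def net_dollar_retention(start_revenue: float, expansion: float,
                         contraction: float, churned: float) -> float:
    """A customer cohort's revenue after a year, relative to its starting revenue."""
    return (start_revenue + expansion - contraction - churned) / start_revenue


def workflow_penetration(tasks_using_ai: int, total_tasks: int) -> float:
    """Share of a customer's workflow tasks that the AI tool handles or improves."""
    return tasks_using_ai / total_tasks


if __name__ == "__main__":
    print(f"Revenue per 1k tokens: ${revenue_per_thousand_tokens(12_000, 2_000_000_000):.4f}")
    print(f"Gross margin per call: ${gross_margin_per_call(12_000, 9_000, 1_500_000):.4f}")
    print(f"Net dollar retention: {net_dollar_retention(1_000_000, 300_000, 50_000, 100_000):.0%}")
    print(f"Workflow penetration: {workflow_penetration(18, 60):.0%}")
```

With the made-up figures shown, usage earns $0.006 per thousand tokens and keeps $0.002 of gross profit per call, the customer cohort ends the year spending 115% of what it started with, and the tool touches 30% of the workflow. A net dollar retention above 100% means existing customers are spending more over time, even before any new customers are added.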

Cycles and Corrections

Every cycle in financial history begins with a powerful narrative and ends with a reckoning. AI’s narrative — that intelligence itself can be industrialised — is arguably the most ambitious of all. The challenge for investors and policymakers alike is to differentiate between short-term hype and long-term transformation. When the correction comes, as it always does, the survivors will be those building not just for valuation, but for real and lasting demand.

Now, as this system scales, a new narrative is taking shape. Large language models (LLMs) are no longer the headline term; the next justification for another wave of energy and token consumption has already arrived in the form of “world models.” Promoted by Elon Musk’s xAI and rivals at Meta and Google, these models aim to simulate entire physical environments, merging AI with robotics, gaming, and real-world physics. Nvidia told the Financial Times that the potential market for world models could be almost the size of the present global economy.

The question isn’t whether we’ll use AI…it’s whether we can stop ourselves from asking.